- Google DeepMind has launched a new tool that can embed an “invisible” watermark in AI-generated images.

- The research division says the aim is to help track AI-produced images that could be used to misinform and manipulate people online.

- So far, only images made with Google’s Imagen software can carry the watermark and be tracked using the tool.

Since ramping up its efforts to catch up with Microsoft on products that use generative artificial intelligence (AI), Google has repeatedly stressed the need for responsible use of AI.

In keeping with this objective, Google, through its DeepMind research division, has launched a new tool that embeds digital watermarks in AI-created images. These watermarks are invisible to the naked eye, exist only in the pixels of the image, and can be used to tell whether a picture was made by AI.

“AI-generated images are becoming more popular every day. But how can we better identify them, especially when they look so realistic?” the firm writes in a DeepMind blog post.

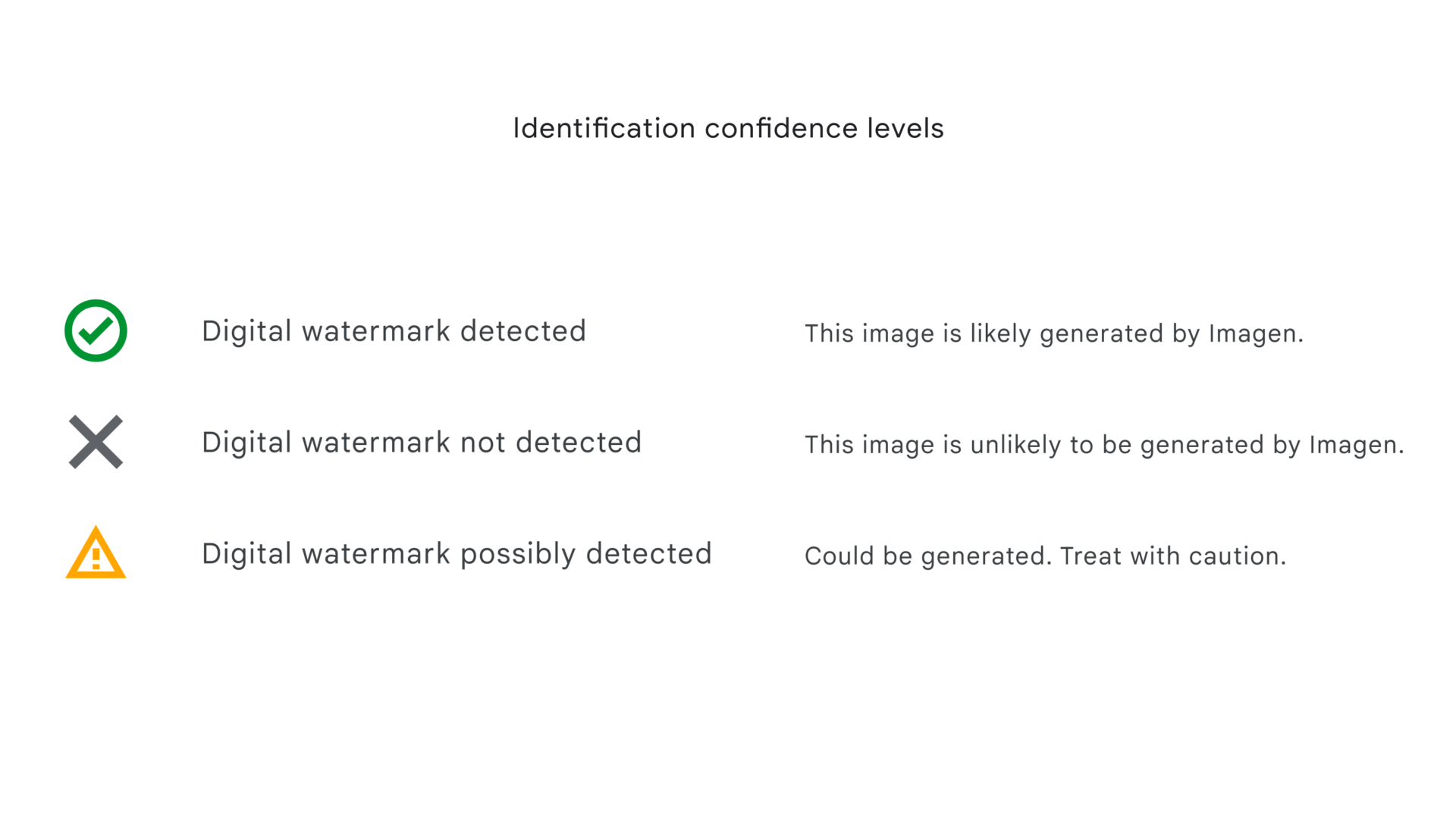

It claims the answer is SynthID, a new technology that “embeds a digital watermark directly into the pixels of an image, making it imperceptible to the human eye, but detectable for identification.”

“While generative AI can unlock huge creative potential, it also presents new risks, like enabling creators to spread false information — both intentionally or unintentionally,” the blog reads.

In March, photos began circulating on social media purporting to show former US President Donald Trump being arrested. The photos drew enormous attention, with millions of views and thousands of reposts, but they were fake, made using AI-powered software such as Stable Diffusion and Midjourney.

“Being able to identify AI-generated content is critical to empowering people with knowledge of when they’re interacting with generated media, and for helping prevent the spread of misinformation,” says Google.

SynthID builds on Google Cloud technology and was developed by DeepMind and Google Research as a technical approach to tracing and tracking AI-generated images in the future.

For now, the tool only works with images created by Google’s Imagen software: users can embed the watermark in the images they generate with Imagen.

“We designed SynthID so it doesn’t compromise image quality, and allows the watermark to remain detectable, even after modifications like adding filters, changing colours, and saving with various lossy compression schemes — most commonly used for JPEGs,” it further explains, adding that two AI models are used: one to watermark images and another to identify those watermarks.

While the tool is not yet foolproof, it could become a benchmark for future methods of detecting AI-made images, a task that grows more important as image generators become ever more capable of producing realistic fakes.

“SynthID could be expanded for use across other AI models and we’re excited about the potential of integrating it into more Google products and making it available to third parties in the near future — empowering people and organisations to responsibly work with AI-generated content,” the blog concludes.

[Image – Charlie Warzel on Twitter]