Without making a single public comment on the matter, Taylor Swift has somehow managed to move Big Tech. She has forced X, formerly Twitter, to change its search function, and US lawmakers are now looking at tabling new legislation to deal with the AI-generated nude images of the 33-year-old singer-songwriter circulating online.

X has re-opened the ability to search for Swift on the platform after shutting it down last week.

“Search has been re-enabled and we will continue to be vigilant for attempts to spread this content and will remove it wherever we find it,” said Joe Benarroch, head of business operations at the Elon Musk-owned social media platform.

The ability to search for Swift was removed amid what has been described as a “deluge” of fake AI-generated images of the singer.

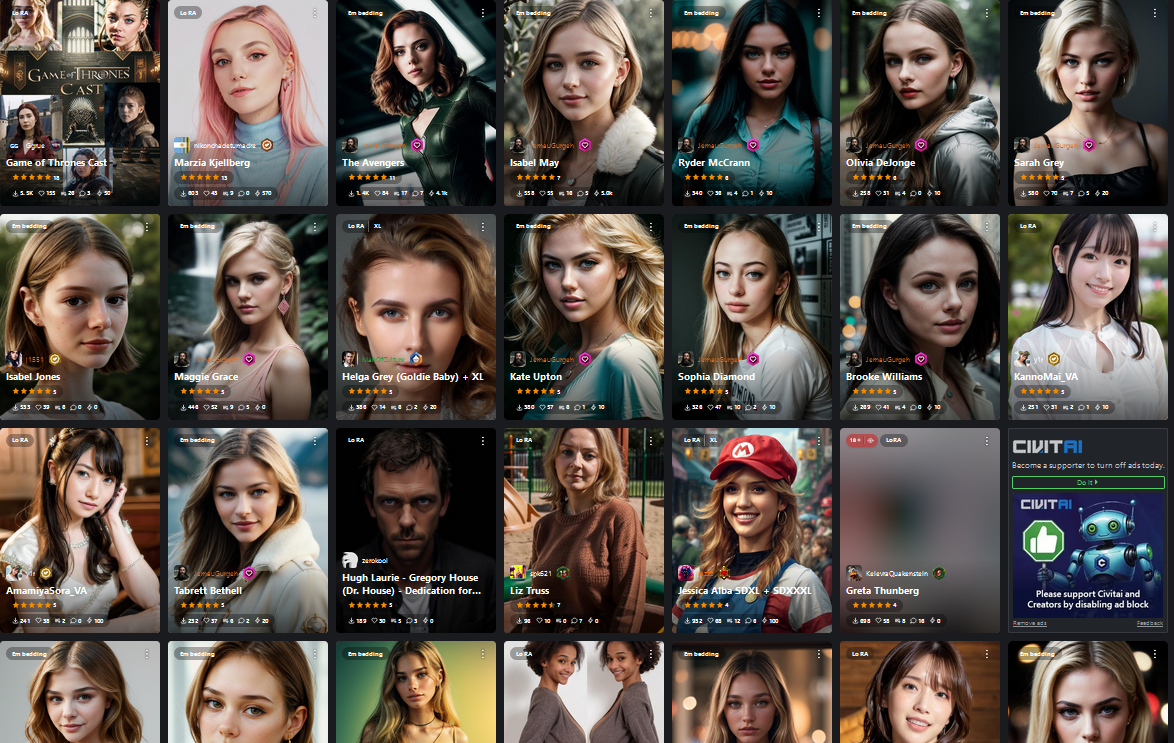

Swift is not the only celebrity whose faked porn images are circulating online en masse. The phenomenon has existed for as long as Photoshop and the internet have, but with the advent of AI image generation, the problem has only grown. The largest and most popular Stable Diffusion model website has its own category for models trained on celebrities.

You can find models that generate images of a wide range of famous faces, from Scarlett Johansson to Hugh Laurie. They are allowed on the platform because, ostensibly, they are intended for safe-for-work use, but all it takes to generate non-consensual explicit content with one of these models is to type “nude” into the prompt box.

Last Friday, the White House called the fake nudes of Swift being shared online “alarming.”

“While social media companies make their own independent decisions about content management, we believe they have an important role to play in enforcing their own rules to prevent the spread of misinformation and non-consensual, intimate imagery of real people,” said White House press secretary Karine Jean-Pierre.

Members of US Congress are now pushing to pass the No AI FRAUD Act [PDF], which specifically cites the Tom Hanks deepfake fraud incident, the Drake and The Weeknd “Heart on My Sleeve” song, and the generation of non-consensual explicit images of high school girls in New Jersey as reasons the bill is needed.

If passed, the bill would effectively ban the use of AI deepfakes to recreate another human being without their consent, at least in the US. Despite the other celebrities named in the bill, it is Swift who has become the face of the push for protections against AI deepfakes and nudes.

“We’re certainly hopeful the Taylor Swift news will help spark momentum and grow support for our bill, which as you know, would address her exact situation with both criminal and civil penalties,” a spokesperson for Joe Morelle, one of the lawmakers pushing the bill, told ABC News over the weekend.

Expect politicians to keep pinning their hopes on Swift and her legion of adoring fans to push the problem further into the mainstream, taking advantage of what has been termed the “Taylor Effect”, a reflection of the singer’s influence on collective behaviour. When Swift attends a football game, her mere presence in the crowd has been enough to hand the NFL its highest-rated game of the week among female viewers, with 24 million people tuning in, many of them looking for the celebrity.

Another term, “Taylornomics”, was coined for the mass spending Swift drives simply by showing up. As the Wall Street Journal writes, “When Taylor Swift comes to town, Swifties go on a spending spree.” Her global Eras Tour, which ran last year, became the first tour to gross over $1 billion. It was her fans who first began reporting the explicit images, and who began uploading more positive pictures of Swift in her defence.

The question now is whether the Taylor Effect can help carry legislation against the abuse of generative AI out of the headlines and into law. Digital artists have been fighting against AI-generated images for some time, and SAG-AFTRA members downed tools for several months in 2023, with fears of AI supplanting human performers a top-of-mind concern.

Swift has even managed to shake Microsoft, one of the world’s largest AI product vendors. CEO Satya Nadella said in an interview with NBC that explicit AI-generated images call for “guardrails” to protect individuals from harm.

“I’d say two things. One is again, I go back to I think … what is our responsibility? Which is all of the guardrails that we need to place around the technology so that there’s more safe content that’s being produced and there’s a lot to be done there and not being done there,” explained Nadella.

[Image – Photo by Rosa Rafael on Unsplash]