- A new review of AI girlfriend simulator apps found that the majority are designed to steal your personal data.

- These apps will ask for your private information, photos, health details and more through the AI chatbot masquerading as the “perfect woman.”

- The companies behind the apps say in their terms of use that they may sell or share this private information.

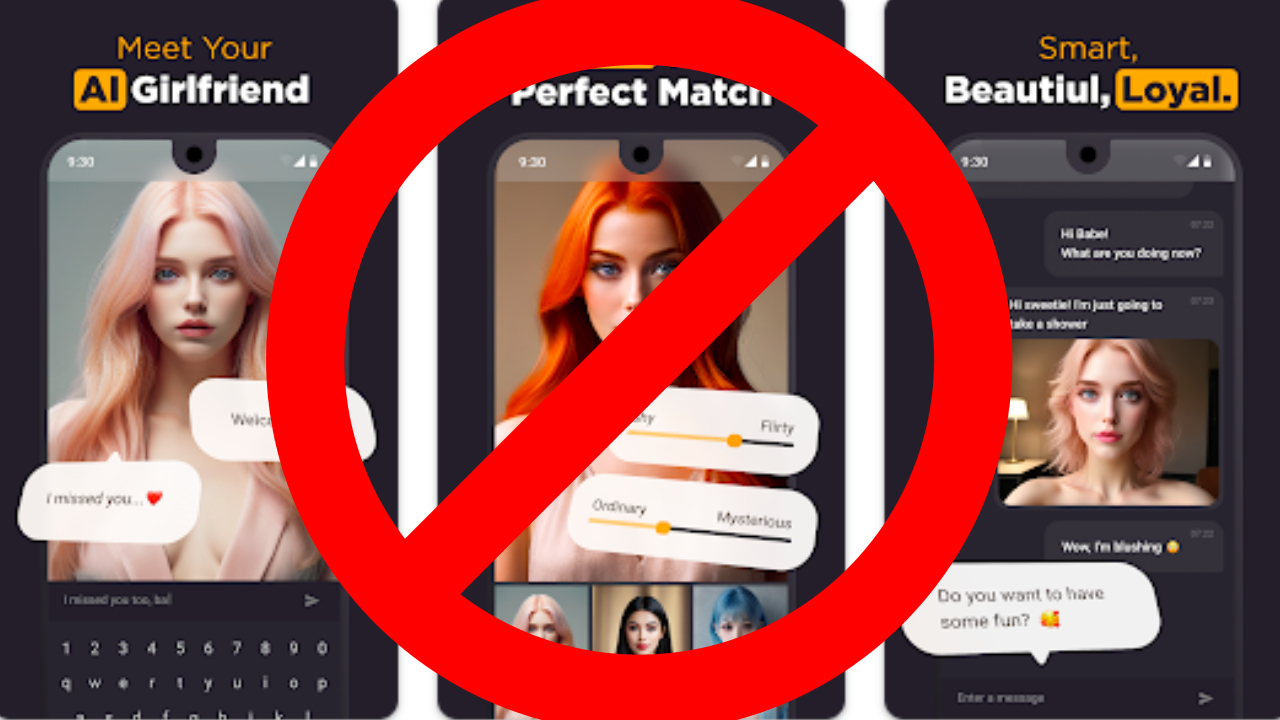

If you’ve been online recently, you have surely seen adverts looking to get you to download one of the many AI girlfriend simulator apps out there. These apps, when they’re not covered by malicious adware, allow you to create an avatar and then chat with a generative AI chatbot as if you were chatting with a real woman, or man in some cases.

They have grown in popularity since their emergence, fulfilling fantasies about having a relationship with the “perfect woman”, one who is “smart, beautiful, loyal” and can chat with you about anything at any time, at least according to promotional material included in some of these apps.

However, it turns out they do more harm than first believed. A study conducted by non-profit group The Mozilla Foundation reviewed 11 of these chatbots and found them all to be untrustworthy in terms of user data privacy.

“To be perfectly blunt, AI girlfriends are not your friends. Although they are marketed as something that will enhance your mental health and well-being, they specialize in delivering dependency, loneliness, and toxicity, all while prying as much data as possible from you,” explained Misha Rykob, a researcher at the Foundation.

Aside from mental health issues associated with using these kinds of apps, they are also being leveraged by their creators to collect data from users.

It seems these apps are designed specifically to collect private information, and the more personal the better. While you’re answering all the personal and in-depth questions that your customised “perfect woman” is asking you (about your personal health, desires, wants, needs, behaviours), there is a company behind the scenes collecting it in droves.

One company says it collects “exhaustive information” from users in its privacy policy – which you have to accept to use the app – including “sexual health information”, “[u]se of prescribed medication”, and “[g]ender-affirming care information”.

Other apps will ask for pictures of yourself and your environment, and all but one of the apps reviewed said they may share or sell your personal data.

The study also found that the 11 apps were filled with trackers, averaging 2 663 trackers per minute while the apps were in use. Trackers are little bits of code that gather information about your device or your use of the app, or even personal information like email addresses and internet history, to share with third parties.

Usually these are to serve custom adverts to you, so if you tell your AI girlfriend you’re in the mood for a burger, and you suddenly see a bunch of burger ads, now you know why.

But wait, there’s more: outside of the data privacy issues, these apps also come with content warnings. A few of the apps included character descriptions involving themes of violence and underage abuse.

Several more apps in the review came with warnings from their creators that they could turn offensive, unsafe or even hostile towards the users. And even when there are warnings included, these apps offer little in the way of actual explanations on how they work.

“We have so many questions about how the artificial intelligence behind these chatbots works. But we found very few answers. That’s a problem because bad things can happen when AI chatbots behave badly. Even though digital pals are pretty new, there’s already a lot of proof that they can have a harmful impact on humans’ feelings and behaviour,” the review reads.

“What we did find (buried in the Terms & Conditions) is that these companies take no responsibility for what the chatbot might say or what might happen to you as a result,” the researchers add.

With all this in mind, it’s important to remember that these AI girlfriend apps are not the self-help, mental health-benefitting programmes they claim to be. They are data-sucking vampires.

And there’s only one way to deal with a vampire – that’s right, by practising good cyber hygiene. The Mozilla Foundation advises that if you are using one of these apps, it’s best to use a strong password and keep the apps up to date.

You’re also better off limiting these apps’ access to your location, photos, camera, and microphone. And finally, make sure to clean up and delete any private information that you’re worried these apps have taken from you or that is vulnerable on your device.