- Facebook has announced that it is taking steps to crack down on the amount of spam that appears on users’ Feeds.

- It will lower the reach of accounts that routinely share “distracting content”.

- The Meta-owned platform also said it will demonetise accounts deemed to be part of spam networks.

To address a problem, you first have to admit that you have one. Facebook, the Meta-owned platform, has finally acknowledged that spam is a problem it has turned a blind eye to in the past, and that is seemingly set to change.

In a blog post, Facebook explained that it will begin cracking down on accounts it believes to be sharing spam content that clutters up the Feeds of users.

“Facebook Feed doesn’t always serve up fresh, engaging posts that you consistently enjoy. We’re working on it. We’re making a number of changes this year to improve Feed, help creators break through and give people more control over how content is personalized to them,” it noted.

As part of its recent “OG Facebook” plan, the social media platform is launching an initiative to tackle spam more effectively.

“Some accounts try to game the Facebook algorithm to increase views, reach a higher follower count faster or gain unfair monetization advantages. While the intentions are not always malicious, the result is spammy content in Feed that crowds out authentic creator content,” it explained.

“Moving forward, we’re taking a number of steps to reduce this spammy content and help authentic creators reach an audience and grow,” it continued.

There are three areas in particular where Facebook is aiming to make a difference to the experience of users. These include:

- Lowering the reach of accounts sharing spam content,

- Investing to remove accounts that coordinate fake engagement and impersonate others,

- Protecting and elevating creators sharing original content.

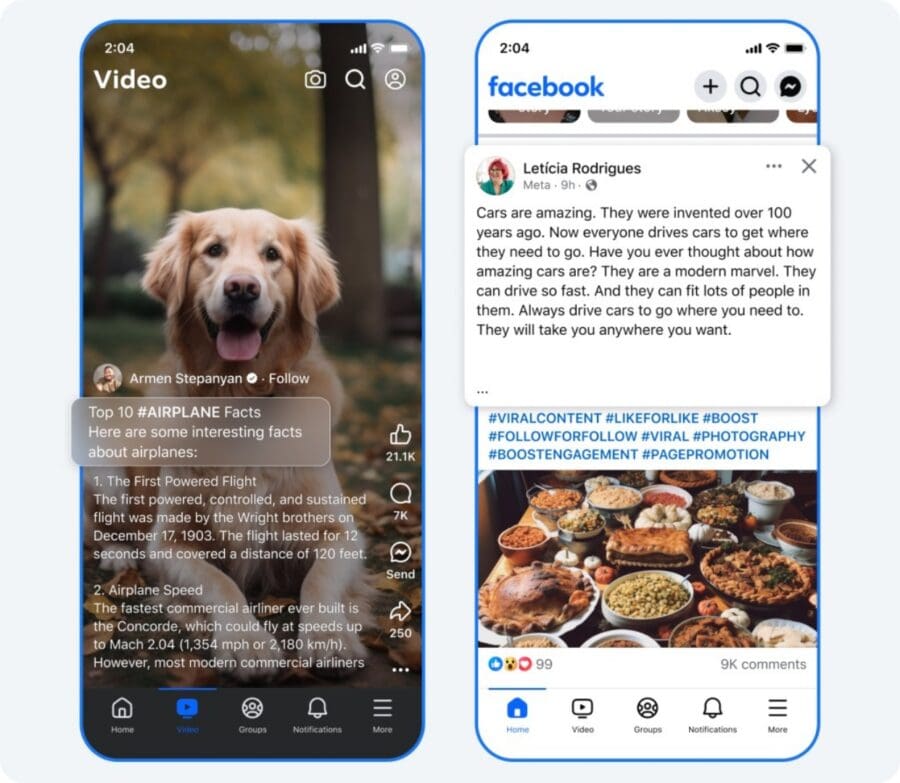

Unpacking the above a little further, Facebook said it will take action against accounts that post content with long, distracting captions, often with an inordinate number of hashtags. Such accounts will only have their content shown to their followers and will not be eligible for monetisation.

“Spam networks often create hundreds of accounts to share the same spammy content that clutters people’s Feed. Accounts we find engaging in this behavior will not be eligible for monetization and may see lower audience reach,” it added.

“Spam networks that coordinate fake engagement are an unfortunate reality for all social apps. We’re going to take more aggressive steps on Facebook to prevent this behavior. For example, comments that we detect are coordinated fake engagement will be seen less,” it advised.

Having taken down over 23 million profiles that were impersonating large content producers in 2024, Facebook said it will employ more proactive measures this year to address impersonation. To that end, more features are being added to Moderation Assist, Facebook’s comment management tool, to detect and auto-hide comments from people potentially using a fake identity. Creators will also be able to report impersonators in the comments, it confirmed.

Lastly, when it comes to accounts copying and pasting original content, the platform is working on more controls for creators to effectively combat this.

“The content that creators share is an expression of themselves. When other accounts reuse their content without their permission, it takes unfair advantage of their hard work. We continue to enhance Rights Manager to help creators protect their original content. We’re also providing guidance to help creators making original, engaging content succeed on Facebook,” it concluded.

We’re pleased to see Facebook acknowledge that it has a spam problem and that it has some countermeasures in the works, but it remains to be seen how effective they will be in getting back to the OG experience it has been touting.