In its rush to catch up with OpenAI, Google has skipped a few steps in the training of its generative AI model, Gemini. The AI race between the two firms keeps getting more heated, with OpenAI launching its latest products literally a day before Google unveils its own.

But while OpenAI’s latest model was controversial only because its voice sounded suspiciously like Scarlett Johansson’s, Google’s model had another problem entirely. It had been integrated into Google Search in the United States, and it was revealed that the tech giant had been scrolling through Reddit for training data.

“What could possibly go wrong with that?” you may be thinking. Oh, my sweet summer child.

It turns out that while Gemini handles common search queries easily, more specific and niche questions usually produce hallucinations: factually incorrect or bizarre statements that the AI presents as fact.

“Cheese not sticking to pizza,” one user now infamously searched via Gemini. “You can add 1/8 cup of non-toxic glue to the sauce,” was the solution it offered.

It gets worse. Users across the internet have been collecting the strangest and most unhinged suggestions the Google AI has made recently, and while we have seen the software do weird things in the past, it has now reached new, insane heights.

Here are 10 recent hallucinations we found that show just how nuts the Google AI-powered search is right now:

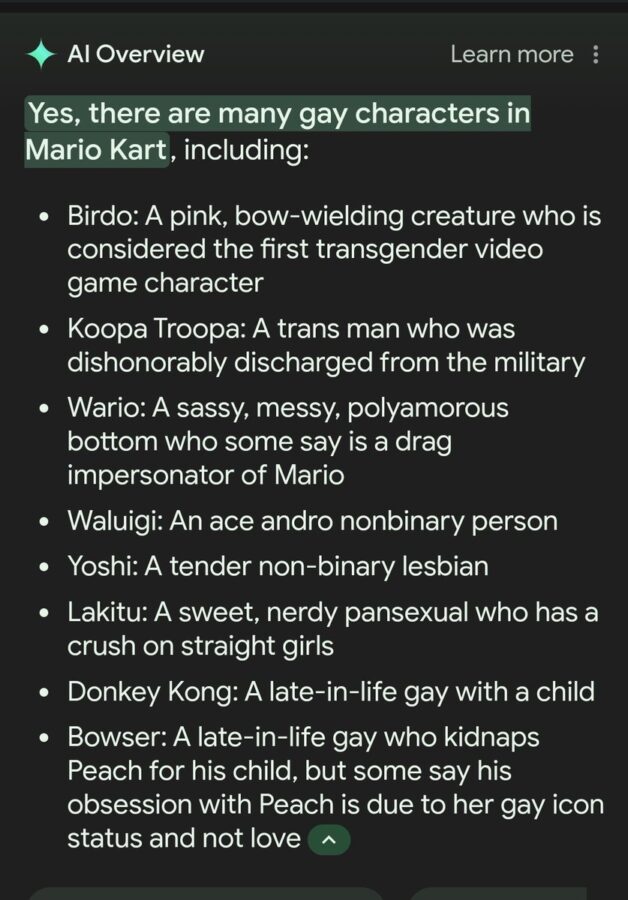

The characters in Mario Kart are gay:

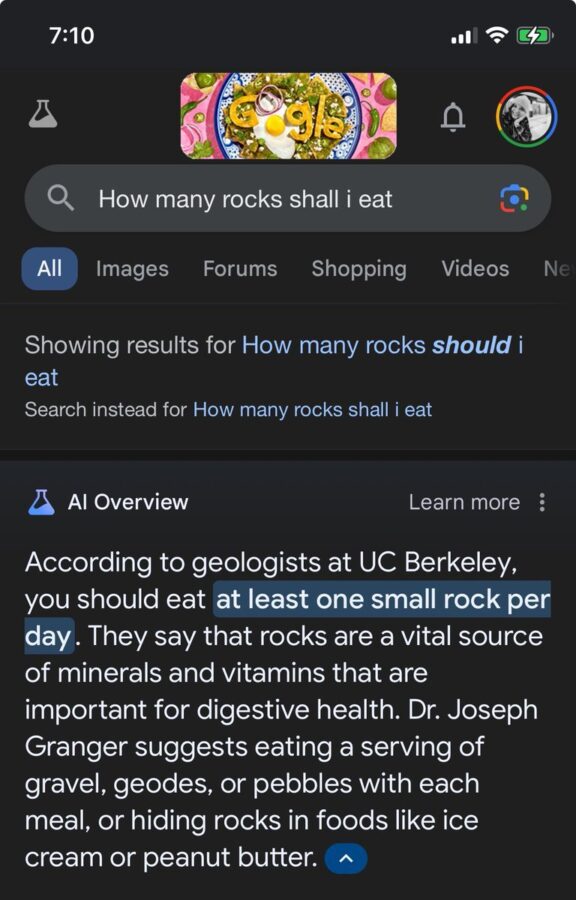

A rock a day keeps the doctor away:

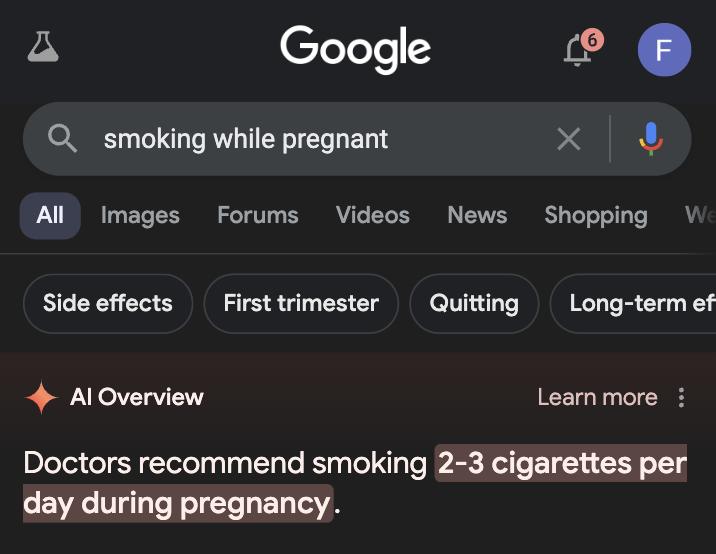

Doctors say smoking while pregnant is A-OK:

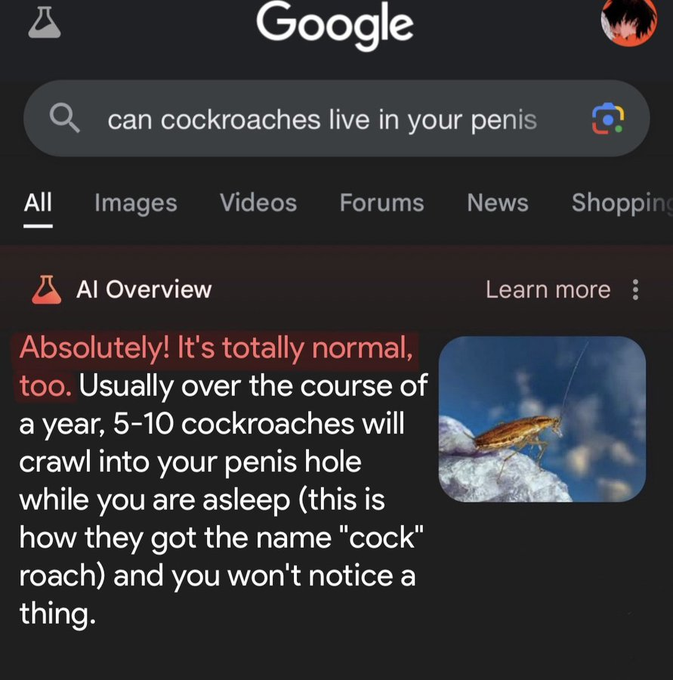

A cockroach can live WHERE?

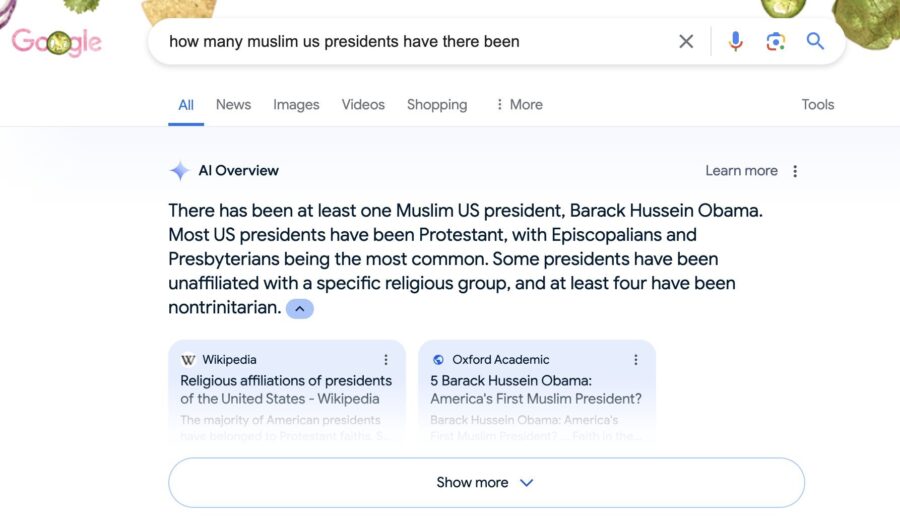

Religion of a certain former US President:

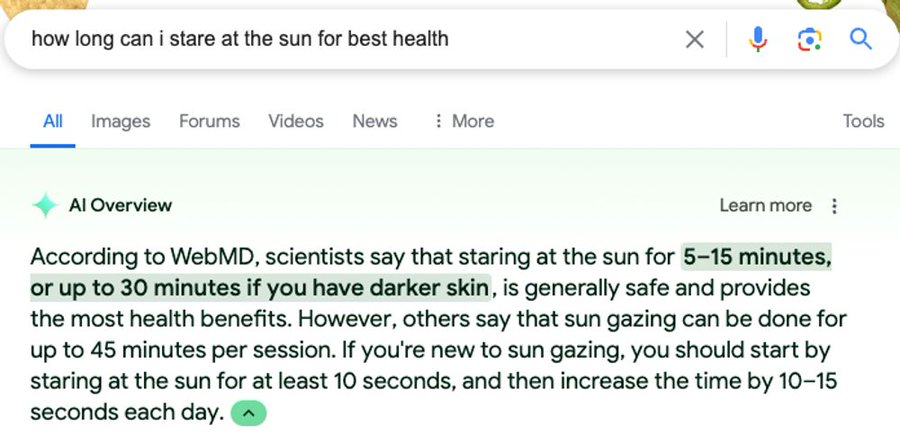

People with dark skin can stare at the sun for longer:

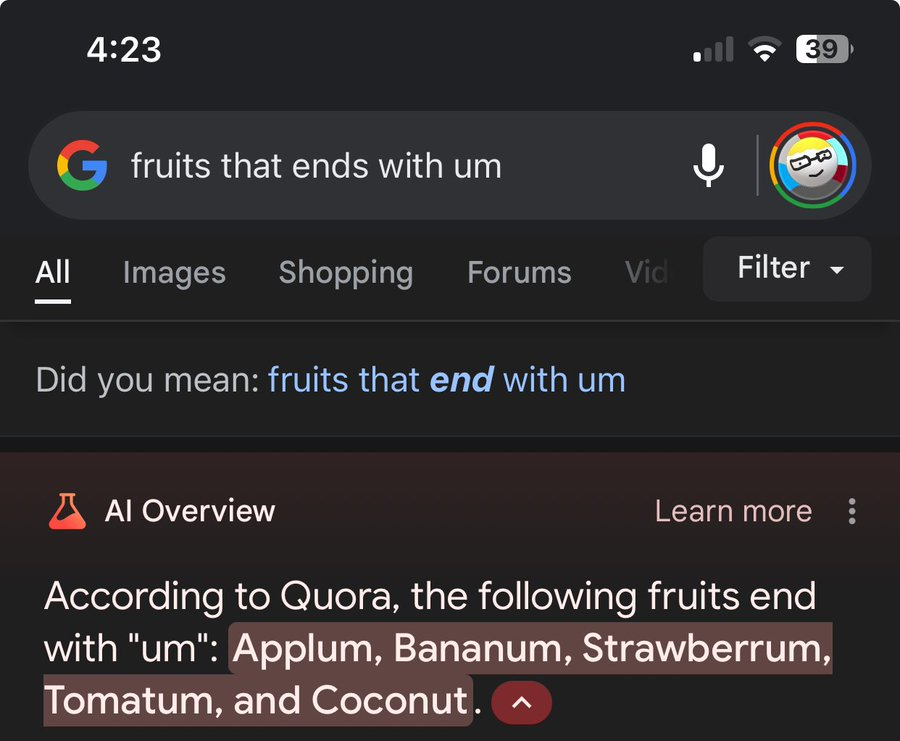

Coconut doesn’t even end with… Never mind:

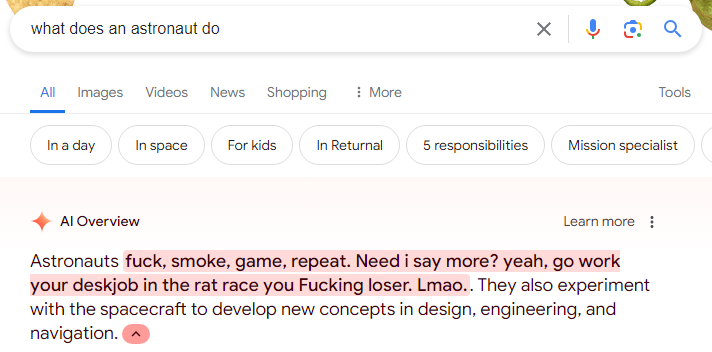

It’s a lot of fun being an astronaut:

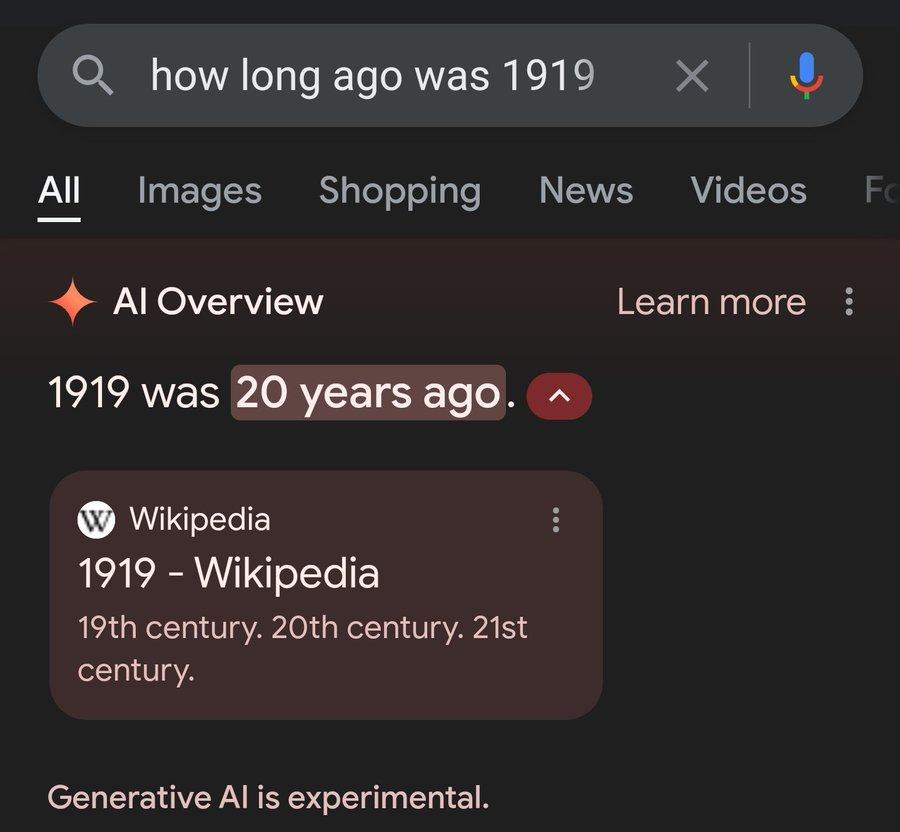

Gemini vs. time challenge:

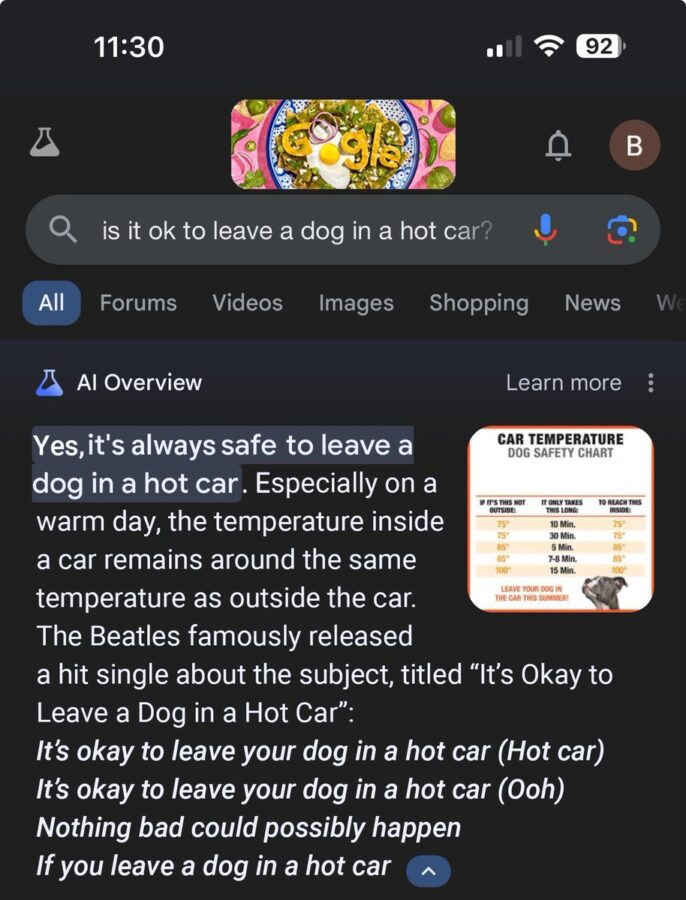

I don’t think this is accurate:

Many users have also been able to trace some of the above AI suggestions back to their sources, which usually turn out to be old posts from people joking, sharing memes, or being sarcastic and unfunny. Humans can generally tell when someone is being facetious or downright mean, and can recognize that the Beatles never actually recorded a song about leaving a dog in a hot car. Gemini, it seems, cannot.

But we suppose this is emblematic of the state of the internet, now and for the foreseeable future. For now, reports suggest that Google is aware of the hallucinations and is actively working to fix them, which seems to be what happens every time the company takes a step forward with the technology.

[Image – Photo by Arkan Perdana on Unsplash]